Part I: Collect Everything via Integration

At some point, your organization may want to consider Machine Learning, especially if it operates in a Business-to-Consumer (B2C) environment, though it can also serve some Business-to-Business (B2B) applications. Stanford University defines Machine Learning as “the science of getting computers to act without being explicitly programmed.”

Many organizations don’t realize the benefits of Machine Learning. It sounds intimidating, the stuff of collegiate scholars, so companies may avoid what is becoming an increasingly routine path to taking the customer experience to a new level.

Machine Learning is nearly ubiquitous in modern online life. Consider: you search for something on Amazon, and later Facebook recommends similar products and ads to you.

What do I need to collect?

When it comes to building product recommendation systems, everything is relevant. Some of the more advanced uses of Machine Learning consume more obscure data points, and in those cases it is harder to tell up front which data will have predictive value.

One of the cooler and more arcane Machine Learning projects culled data points from the complete seasons of AMC’s The Walking Dead to try to predict which personality traits are best suited to surviving that particular kind of zombie apocalypse. Many of its data points were extremely subjective and, compared to rote uses, arcane, and the model hasn’t kept pace with the often capricious choices of the show’s writers. Still, it makes a profound point: creating useful algorithms to predict behavior in a narrow set of uses is easier than ever.

With product recommendation systems, however, what matters is a little less oblique than survival factors. Key product-identifying data has strong predictive value: product identifiers, categories, descriptions, keywords, and price.
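
As a minimal sketch, assuming a TypeScript/Node.js codebase (the stack this series uses), a product record can keep those identifying fields together, with keywords normalized so spelling and casing variants don’t fragment the signal. The field names and the `makeProductRecord` helper are illustrative assumptions, not a required schema.

```typescript
// Illustrative product record; field names are assumptions, not a required schema.
interface ProductRecord {
  productId: string;    // e.g. the SKU
  categories: string[]; // web-facing category path
  description: string;
  keywords: string[];
  price: number;        // one currency throughout, e.g. USD
}

// Trim, lowercase, drop blanks, and de-duplicate so "Boots" and " BOOTS"
// count as the same keyword.
function normalizeKeywords(raw: string[]): string[] {
  const cleaned = raw.map((k) => k.trim().toLowerCase()).filter((k) => k.length > 0);
  return cleaned.filter((k, i) => cleaned.indexOf(k) === i).sort();
}

function makeProductRecord(
  productId: string,
  categories: string[],
  description: string,
  rawKeywords: string[],
  price: number,
): ProductRecord {
  // Reject prices that would quietly skew a model later.
  if (!isFinite(price) || price < 0) {
    throw new Error(`invalid price for ${productId}: ${price}`);
  }
  return {
    productId,
    categories,
    description: description.trim(),
    keywords: normalizeKeywords(rawKeywords),
    price,
  };
}

const boot = makeProductRecord(
  "SKU-1001",
  ["Footwear", "Boots"],
  "Waterproof leather hiking boot ",
  ["Boots", " hiking", "BOOTS", "waterproof", ""],
  129.99,
);
console.log(JSON.stringify(boot));
```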

Linking user identity is important. If your e-commerce system doesn’t have links that capture social media identity, consider building them, as you can tap into a number of tangential data points. At the very least, capture the user identity native to your e-commerce platform.
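
One way to model this, sketched under the assumption that the platform’s own account ID is always the anchor and social accounts are optional links, is shown below. `UserIdentity`, `linkSocialIdentity`, and the provider names are hypothetical.

```typescript
// Hypothetical identity model: the platform's own account ID is always present;
// social identities are optional links added when a customer connects an account.
interface SocialIdentity {
  provider: string;   // e.g. "facebook" (illustrative)
  externalId: string; // ID issued by that provider
}

interface UserIdentity {
  userId: string;     // native e-commerce account ID
  email?: string;
  social: SocialIdentity[];
}

// Returns an identity with the link added, ignoring exact duplicates so
// repeated logins through the same provider don't pile up.
function linkSocialIdentity(user: UserIdentity, link: SocialIdentity): UserIdentity {
  const alreadyLinked = user.social.some(
    (s) => s.provider === link.provider && s.externalId === link.externalId,
  );
  return alreadyLinked ? user : { ...user, social: user.social.concat([link]) };
}

let shopper: UserIdentity = { userId: "u-42", email: "pat@example.com", social: [] };
shopper = linkSocialIdentity(shopper, { provider: "facebook", externalId: "fb-9001" });
shopper = linkSocialIdentity(shopper, { provider: "facebook", externalId: "fb-9001" });
console.log(shopper.social.length);
```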

If your product has a rating system, pull in that data as well. It may not yield immediate results, but as your ML capabilities mature, rating behavior can help you predict a customer’s quality expectations at given price points.
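
To illustrate how ratings relate to price, the sketch below groups one customer’s ratings into price bands and averages the stars in each. The 1 to 5 star scale, the `RatingEvent` shape, and the band boundaries are assumptions you would tune to your own catalog.

```typescript
// One rating as it might be logged (hypothetical shape).
interface RatingEvent {
  userId: string;
  productId: string;
  stars: number; // assumed 1-5 scale
  price: number; // price at the time of the rating
}

// Illustrative price bands; upper bounds are exclusive.
const PRICE_BANDS: { label: string; max: number }[] = [
  { label: "under-25", max: 25 },
  { label: "25-100", max: 100 },
  { label: "over-100", max: Infinity },
];

function bandFor(price: number): string {
  for (const band of PRICE_BANDS) {
    if (price < band.max) return band.label;
  }
  return PRICE_BANDS[PRICE_BANDS.length - 1].label;
}

// Average star rating per price band for a single customer.
function averageRatingByBand(ratings: RatingEvent[], userId: string): Record<string, number> {
  const totals: Record<string, { sum: number; count: number }> = {};
  for (const r of ratings) {
    if (r.userId !== userId) continue;
    const band = bandFor(r.price);
    const t = totals[band] || { sum: 0, count: 0 };
    t.sum += r.stars;
    t.count += 1;
    totals[band] = t;
  }
  const averages: Record<string, number> = {};
  for (const band of Object.keys(totals)) {
    averages[band] = totals[band].sum / totals[band].count;
  }
  return averages;
}

const ratings: RatingEvent[] = [
  { userId: "u-42", productId: "SKU-1", stars: 5, price: 20 },
  { userId: "u-42", productId: "SKU-2", stars: 3, price: 15 },
  { userId: "u-42", productId: "SKU-3", stars: 2, price: 150 },
  { userId: "u-7", productId: "SKU-1", stars: 1, price: 20 },
];
const byBand = averageRatingByBand(ratings, "u-42");
console.log(byBand);
```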

Finally, you need to capture behavior: what was viewed, how often it was viewed, and for how long.
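
Raw view events become useful once they answer “how often” and “how long.” A minimal roll-up, assuming each event carries a client-measured `durationMs` (a hypothetical field), could look like this:

```typescript
interface ViewEvent {
  userId: string;
  productId: string;
  viewedAt: string;   // ISO 8601 timestamp
  durationMs: number; // time on page, assumed to be measured client-side
}

interface ViewSummary {
  views: number;   // how often
  totalMs: number; // how long, summed across views
}

// Roll raw view events up into per-user, per-product counts and dwell time.
function summarizeViews(events: ViewEvent[]): Record<string, ViewSummary> {
  const out: Record<string, ViewSummary> = {};
  for (const e of events) {
    const key = `${e.userId}|${e.productId}`;
    const s = out[key] || { views: 0, totalMs: 0 };
    s.views += 1;
    s.totalMs += e.durationMs;
    out[key] = s;
  }
  return out;
}

const summary = summarizeViews([
  { userId: "u-42", productId: "SKU-1001", viewedAt: "2016-05-01T14:02:11Z", durationMs: 3000 },
  { userId: "u-42", productId: "SKU-1001", viewedAt: "2016-05-02T09:15:40Z", durationMs: 4500 },
  { userId: "u-42", productId: "SKU-2002", viewedAt: "2016-05-02T09:17:05Z", durationMs: 1000 },
]);
console.log(summary);
```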

How do I collect this data?

Much of this data will already be logged. If you host your system on the Microsoft stack, standard Internet Information Services (IIS) logging captures a good amount of data that can be extracted. On the Apache stack, the Apache Commons Logging API is quite comprehensive, as is logging on JBoss application servers. What they all have in common is that building “data shippers” to move those logs into an analytics store has never been easier.
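
As an example of how small a data shipper’s first step can be, the sketch below converts IIS logs in the W3C extended format into JSON objects. It relies on the format’s `#Fields:` directive to name the space-delimited columns and treats `-` as an empty value. The sample log lines are made up, and a real shipper would also handle file rotation and delivery.

```typescript
type LogRecord = Record<string, string | null>;

// Parse W3C extended log text (as written by IIS) into one object per request.
function parseW3CLog(text: string): LogRecord[] {
  let fields: string[] = [];
  const records: LogRecord[] = [];
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line.length === 0) continue;
    if (line.charAt(0) === "#") {
      // Directives such as #Software, #Date, #Fields; only #Fields names columns.
      if (line.indexOf("#Fields:") === 0) {
        fields = line.slice("#Fields:".length).trim().split(/\s+/);
      }
      continue;
    }
    const values = line.split(/\s+/);
    const record: LogRecord = {};
    fields.forEach((name, i) => {
      const v = values[i];
      record[name] = v === undefined || v === "-" ? null : v; // "-" means empty
    });
    records.push(record);
  }
  return records;
}

const sample = [
  "#Software: Microsoft Internet Information Services 10.0",
  "#Fields: date time cs-method cs-uri-stem cs-uri-query sc-status",
  "2016-05-01 14:02:11 GET /products/SKU-1001 - 200",
].join("\n");
const records = parseW3CLog(sample);
console.log(JSON.stringify(records));
```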

I’ve seen some innovative implementations that take pixel tracking to the next level and assign individual pixels as shorthand for larger sets of data. This is especially useful in load-balanced, large-volume implementations, where complex logging integration could easily become a point of failure for an application, or where rendering the tracking data could itself become a bottleneck.
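
The idea can be sketched as a lookup table: each pixel ID is shorthand for a predefined bundle of attributes, and that bundle is expanded away from the request path when the hit is processed. Everything here, including `PIXEL_REGISTRY` and `expandPixelHit`, is hypothetical and only illustrates the pattern.

```typescript
// Hypothetical registry: each pixel ID stands for a predefined bundle of
// attributes, so the page requests only a tiny image such as /px/c7.gif.
interface PixelBundle {
  event: string;
  [attr: string]: string;
}

interface ExpandedHit extends PixelBundle {
  userId: string;
  timestamp: string;
}

const PIXEL_REGISTRY: Record<string, PixelBundle> = {
  c7: { event: "view", page: "product-detail", campaign: "spring-boots" },
  c8: { event: "add-to-cart", page: "product-detail", campaign: "spring-boots" },
};

// Expand a pixel hit into a full event. Unknown IDs return null so a bad ID
// is dropped rather than failing the pipeline.
function expandPixelHit(pixelId: string, userId: string, timestamp: string): ExpandedHit | null {
  if (!Object.prototype.hasOwnProperty.call(PIXEL_REGISTRY, pixelId)) return null;
  return { ...PIXEL_REGISTRY[pixelId], userId, timestamp };
}

const hit = expandPixelHit("c7", "u-42", "2016-05-01T14:02:11Z");
console.log(hit);
```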

With open-source products like PredictionIO or the Elastic Stack (formerly known as the ELK Stack), standing up low-cost, clustered servers for logging and analytics is more approachable than it has ever been.

Okay, so how do I structure the data that I store?

Whatever solution you choose, JSON (JavaScript Object Notation) is the tie that binds.

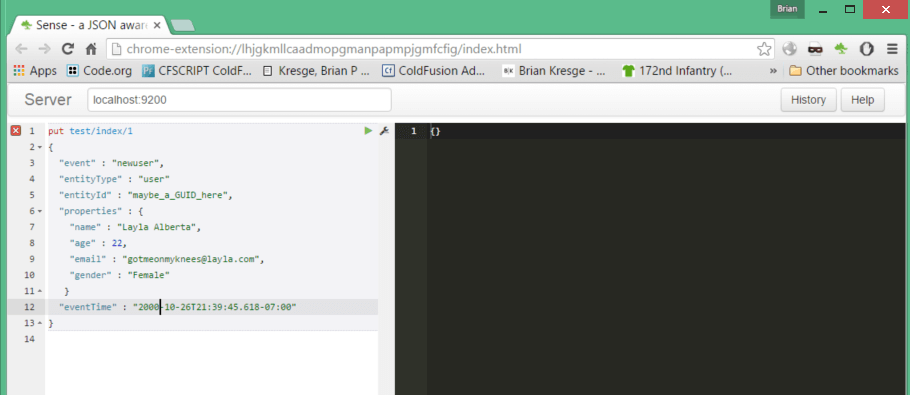

Figure 1 - Sense is a Chrome extension that lets you easily interface with Elasticsearch. Here it shows how simply an event log can be updated and the kinds of data we might store for that event.

A couple of key points:

- Put a timestamp with each event.

- Use consistent values in attributes; for example, use enumerated values for gender. Format values consistently as well, such as phone numbers, state names or abbreviations, and ZIP codes.

- Don’t store data in a form that is cumbersome to parse later, such as binary values. If you need to include a subset of data, nest it using the proper JSON hierarchy (see the “properties” value in Figure 1 for how to add subordinate dimensions to JSON).
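
Putting those points together, a minimal event builder might look like the sketch below. The field names (`event`, `entityId`, `targetEntityId`, `eventTime`, `properties`) are illustrative assumptions rather than the exact schema in Figure 1, and the normalization rules assume U.S. ZIP codes and phone numbers.

```typescript
type Gender = "F" | "M" | "U"; // enumerated values instead of free text

interface CustomerEvent {
  event: string;           // e.g. "view", "rate", "purchase"
  entityId: string;        // the user
  targetEntityId?: string; // the product, when there is one
  eventTime: string;       // ISO 8601 timestamp on every event
  properties: {            // subordinate dimensions nested as JSON, not packed into a blob
    gender: Gender;
    state: string;         // uppercased two-letter abbreviation (assumed input)
    zip: string;           // five digits, kept as a string to preserve leading zeros
    phone?: string;        // digits only
  };
}

interface RawEventInput {
  event: string;
  userId: string;
  productId?: string;
  gender: Gender;
  state: string;
  zip: string;
  phone?: string;
  when: Date;
}

function normalizeZip(zip: string): string {
  const digits = zip.replace(/\D/g, "").slice(0, 5); // drops a ZIP+4 suffix
  if (digits.length !== 5) throw new Error(`unexpected ZIP code: ${zip}`);
  return digits;
}

function buildEvent(input: RawEventInput): CustomerEvent {
  const properties: CustomerEvent["properties"] = {
    gender: input.gender,
    state: input.state.trim().toUpperCase(),
    zip: normalizeZip(input.zip),
  };
  if (input.phone !== undefined) properties.phone = input.phone.replace(/\D/g, "");
  const ev: CustomerEvent = {
    event: input.event,
    entityId: input.userId,
    eventTime: input.when.toISOString(),
    properties,
  };
  if (input.productId !== undefined) ev.targetEntityId = input.productId;
  return ev;
}

const ev = buildEvent({
  event: "view", userId: "u-42", productId: "SKU-1001", gender: "F",
  state: " ma", zip: "02134-1234", phone: "(617) 555-0100",
  when: new Date(Date.UTC(2016, 4, 1, 14, 2, 11)),
});
console.log(JSON.stringify(ev, null, 2));
```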

All right, what about ERP integration in the collection process?

Building meaningful data logging and access is a cumbersome process, even as it has become more approachable for small and mid-sized businesses.

For many of RKL eSolutions’ customers, inventory, pricing, and orders are managed in ERP, with most transactions batched to and from the web e-commerce platform.

Additionally, the systems behind good Machine Learning, like Elastic, have value beyond building product recommendations, especially in sales and marketing analytics. As such, your data collection will need to blur the line between how your company and your customers each relate to your product and sales order history data. You probably categorize your products differently internally than you do in your web presentation, and to harness the full potential of Machine Learning, you’ll want to store both. Since you don’t maintain both in one place, that data will need to be collated before it is logged.
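
A minimal sketch of that collation step, assuming the ERP item code matches the web SKU and that both sources can be exported as arrays (the `ErpItem` and `WebProduct` shapes are hypothetical):

```typescript
interface ErpItem {
  itemCode: string;    // internal item code, assumed equal to the web SKU
  productLine: string; // internal categorization
  price: number;
}

interface WebProduct {
  sku: string;
  webCategory: string; // customer-facing categorization
}

interface CollatedProduct {
  sku: string;
  erpProductLine: string;
  webCategory: string | null; // null when the item isn't sold online
  price: number;
}

// Join both categorizations onto one record before it is logged.
function collate(erpItems: ErpItem[], webProducts: WebProduct[]): CollatedProduct[] {
  const webBySku: Record<string, string> = {};
  for (const w of webProducts) webBySku[w.sku] = w.webCategory;
  return erpItems.map((item) => ({
    sku: item.itemCode,
    erpProductLine: item.productLine,
    webCategory: Object.prototype.hasOwnProperty.call(webBySku, item.itemCode)
      ? webBySku[item.itemCode]
      : null,
    price: item.price,
  }));
}

const collated = collate(
  [
    { itemCode: "SKU-1001", productLine: "FTW-OUT", price: 129.99 },
    { itemCode: "SKU-2002", productLine: "ACC-MISC", price: 4.5 },
  ],
  [{ sku: "SKU-1001", webCategory: "Outdoor > Hiking Boots" }],
);
console.log(JSON.stringify(collated));
```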

Real-time logging at this magnitude and complexity becomes impractical in large-volume environments, so a data warehousing scheme becomes very important. Real-time logging is also prohibitive when serving up a viable mobile sales experience, where you’ll want to reduce the number of sequential events taking place behind the scenes.
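
The core of such a scheme is batching: events are buffered and written in groups instead of one request at a time. Below is a minimal in-memory sketch; `EventBatcher` is hypothetical, and a production version would also flush on a timer, write asynchronously, and persist the buffer so events survive a crash.

```typescript
// Buffer events and hand them off in batches instead of one write per event.
class EventBatcher<T> {
  private buffer: T[] = [];
  private readonly maxBatch: number;
  private readonly flushFn: (batch: T[]) => void;

  constructor(maxBatch: number, flushFn: (batch: T[]) => void) {
    this.maxBatch = maxBatch;
    this.flushFn = flushFn;
  }

  // Queue one event; hand off a full batch as soon as it reaches maxBatch.
  add(event: T): void {
    this.buffer.push(event);
    if (this.buffer.length >= this.maxBatch) this.flush();
  }

  // Hand off whatever is buffered (e.g. on a timer or at shutdown).
  flush(): void {
    if (this.buffer.length === 0) return;
    const batch = this.buffer;
    this.buffer = [];
    this.flushFn(batch);
  }
}

const batchSizes: number[] = [];
const batcher = new EventBatcher<string>(3, (batch) => batchSizes.push(batch.length));
for (let i = 0; i < 7; i++) batcher.add(`event-${i}`);
batcher.flush(); // push out the final partial batch
console.log(batchSizes);
```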

There are a variety of ways to bridge the gap between ERP and an environment conducive to Machine Learning efforts, including custom solutions developed by RKL such as ServiceQueue. In this series, we will use Node.js for its cross-platform compatibility and speed of development.
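
As a small taste of that Node.js bridge, the sketch below turns ERP sales order lines into a request body for Elasticsearch’s `_bulk` API, which expects newline-delimited JSON: an action line followed by a document line for each record, with a trailing newline. The `ErpOrderLine` fields and the `orders` index name are assumptions.

```typescript
// Hypothetical shape of an ERP sales order line after export.
interface ErpOrderLine {
  orderNo: string;
  lineNo: number;
  customerId: string;
  itemCode: string;
  qty: number;
  unitPrice: number;
  orderDate: string; // ISO 8601
}

// Build an Elasticsearch _bulk body: one action line plus one document line
// per record, each terminated by "\n" (the API requires the final newline).
function toBulkBody(index: string, lines: ErpOrderLine[]): string {
  return lines
    .map((l) => {
      const action = { index: { _index: index, _id: `${l.orderNo}-${l.lineNo}` } };
      const doc = { ...l, extendedPrice: l.qty * l.unitPrice };
      return JSON.stringify(action) + "\n" + JSON.stringify(doc) + "\n";
    })
    .join("");
}

const body = toBulkBody("orders", [
  { orderNo: "SO-1001", lineNo: 1, customerId: "C-42", itemCode: "SKU-1001", qty: 2, unitPrice: 10.5, orderDate: "2016-05-01" },
  { orderNo: "SO-1001", lineNo: 2, customerId: "C-42", itemCode: "SKU-2002", qty: 1, unitPrice: 4, orderDate: "2016-05-01" },
]);
console.log(body);
```

The resulting string would be sent as a `POST` to the cluster’s `_bulk` endpoint with a `Content-Type: application/x-ndjson` header. Deriving `_id` from the order and line number means re-sending the same batch overwrites documents instead of duplicating them.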

In this article we won’t delve into what to do with the data once you have it, but broadly, you will need to build integration points so that your internal or external customers can consume it.

Put your data to use for you!

With storage costs low, and the expense of these systems mitigated by open source and by the brain trust available through vendors like RKL eSolutions, today is the day to start collecting.

Even if you don’t have a strategy to consume the data you collect, having a strategy to collect the data is a strong first step. Downstream from Machine Learning data collection are enhanced email marketing capabilities, better social media sales points, stronger relationships with your customers, and an increasingly objective set of metrics for your sales and marketing efforts.

It’s your data, make it work for you!

In the next part, we’ll configure a development environment for testing using Elastic.

To learn more about leveraging Machine Learning with your ERP solution, contact RKL eSolutions.